Gravity Wins: The Collapse of the Monolithic AI Agent

In the early days of SaaS, we built monoliths because they were convenient. You had one codebase, one database, and one deployment pipeline. It felt efficient until the gravity of complexity set in. The codebase became brittle. A single failed dependency brought down the entire application. We learned, painfully, that while a monolith is easy to start, it is punishing to scale.

We are now repeating this history with Artificial Intelligence.

For the last two years, the industry has been obsessed with the “God Model”: the singular, massive Large Language Model (LLM) expected to reason, code, plan, and execute with equal proficiency. This is not engineering; it is hope. And in architecture, hope is a structural liability.

The friction limit has been reached. The “one prompt to rule them all” approach is collapsing under its own probabilistic weight. We are moving away from the era of the Heroic LLM and entering the era of the Systemic Squad. This is the microservices moment for agents.

The Physics of Failure

Why does the monolithic agent fail? It is a matter of entropy.

When you ask a single model to act as a Planner, a Coder, a QA Engineer, and a Documentation Writer simultaneously, you are asking it to hold opposing contexts in a highly volatile state. The model must balance the high-level abstraction of the goal with the low-level syntax of the execution.

In physics, a system seeks its lowest energy state. For an LLM, the “lowest energy state” is often a hallucination or a lazy approximation. As the complexity of the prompt increases, so does the “surface area” for error: every added role and instruction is another constraint the model can violate or quietly trade off against the others. You cannot prompt your way out of this physics. Adding more instructions to a context window is like adding more floors to a building with a cracked foundation. Eventually, the load exceeds the bearing capacity, and the structure shears.

We attempted to fix this with “Chain of Thought” and massive context windows. These are band-aids. They treat the symptom (incoherence) rather than the disease, which is structural misalignment.

The Decoupling: From Heroics to Mechanics

The solution is not a smarter model. It is a smarter system. We must kill the hero (the monolithic agent) to build the machine (the multi-agent system).

This mirrors the transition from monolithic software architectures to microservices. In a microservices architecture, we accept that distinct functions require distinct environments, distinct resources, and distinct validation logic. We are now applying this to cognitive labor.

We are shifting to Specialized Multi-Agent Systems. In this paradigm, we do not have one agent trying to “do the job.” We have a squad, orchestrated by rigid logic, where each component has a single, verifiable responsibility.

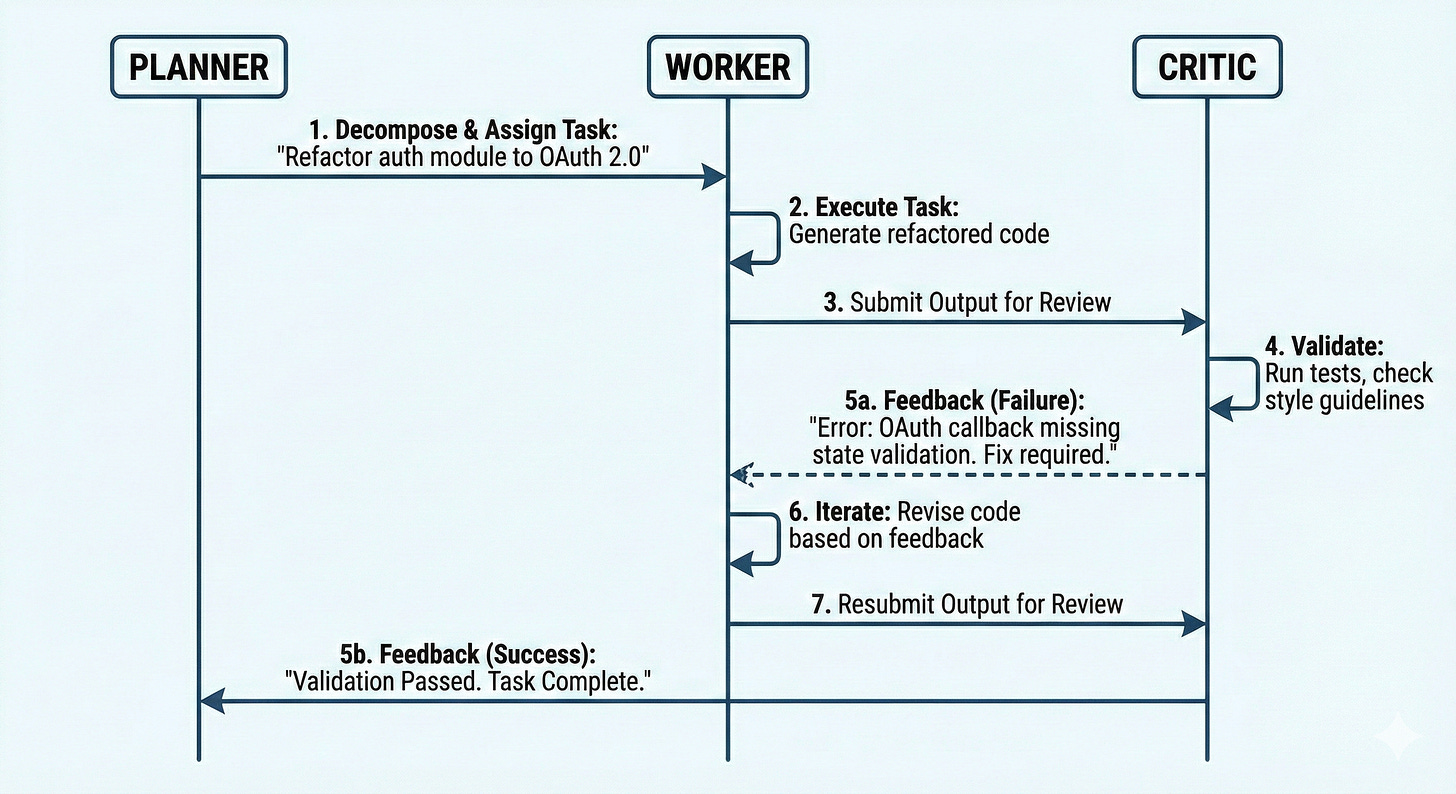

The standard architectural pattern emerging for 2026 involves three distinct functional roles: The Planner, The Worker, and The Critic.

1. The Planner: The Architect

The Planner does not execute. It does not write code. It does not browse the web. Its sole responsibility is Decomposition.

When a complex objective enters the system (e.g., “Refactor the authentication service to use OAuth 2.0”), the Planner’s job is to break this gravity-heavy goal into atomic, manageable units of work. It defines the dependency graph. It establishes the sequence.

The Planner creates the blueprint. It operates at a high level of abstraction, optimizing for logical flow and resource allocation. If the Planner tries to write the code, the system fails. It must stay out of the weeds to see the forest.
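To make “defines the dependency graph” concrete, here is a minimal Python sketch of what a Planner might hand to the orchestrator for the OAuth example. The task names and the `PLAN` structure are hypothetical, and the plan is hard-coded where a real Planner would generate it from a model call. Python's standard-library `graphlib` then turns the graph into batches of units whose dependencies are already satisfied.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical Planner output for "Refactor the authentication service to use OAuth 2.0".
# Keys are atomic work units; values are the units each one depends on.
# Hard-coded here; a real Planner would generate this structure.
PLAN: dict[str, set[str]] = {
    "define_oauth_config": set(),
    "write_callback_interface": {"define_oauth_config"},
    "migrate_session_storage": {"define_oauth_config"},
    "implement_callback_handler": {"write_callback_interface"},
    "remove_legacy_password_flow": {
        "implement_callback_handler",
        "migrate_session_storage",
    },
}


def parallel_batches(plan: dict[str, set[str]]) -> list[list[str]]:
    """Group units into batches; every unit in a batch can run concurrently.

    Raises graphlib.CycleError if the plan is circular, a cheap signal that
    the decomposition itself is broken.
    """
    sorter = TopologicalSorter(plan)
    sorter.prepare()  # validates the graph and detects cycles
    batches: list[list[str]] = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # sorted only for stable output
        batches.append(ready)
        sorter.done(*ready)
    return batches


if __name__ == "__main__":
    try:
        for i, batch in enumerate(parallel_batches(PLAN), start=1):
            print(f"batch {i}: {batch}")
    except CycleError as exc:
        print(f"Planner produced a circular plan: {exc.args[1]}")
```

With this plan the sketch yields four batches, and the second one (`migrate_session_storage`, `write_callback_interface`) can be dispatched to two Workers in parallel. A circular plan is rejected before any Worker runs.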

2. The Worker: The Laborer

The Worker is the engine of execution. It is purposely myopic. It does not know why it is doing a task; it only knows how to do it.

It receives a narrow, scoped directive from the Planner (e.g., “Write the interface for the OAuth callback handler”). Because its context is stripped of the broader project complexity, the Worker operates with much higher determinism. The probability of hallucination drops because the “search space” for the answer is constrained.

This is the equivalent of a stateless function in serverless architecture. Input A leads to Output B, with drift squeezed toward zero.
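A minimal sketch of the Worker as a stateless function, assuming a “model” is simply any callable from prompt text to completion text. `WorkUnit`, `build_prompt`, and `run_worker` are illustrative names, not an established API. The point is structural: the prompt is built only from the scoped directive, so nothing from the wider project can leak in. Whether the output itself repeats still depends on the model's sampling settings; the code only guarantees that the input is narrow and identical across calls.

```python
from dataclasses import dataclass
from typing import Callable

# Assumption: a "model" is any function from prompt text to completion text.
# In production this would wrap an LLM client call.
Model = Callable[[str], str]


@dataclass(frozen=True)  # immutable: a Worker cannot mutate its directive
class WorkUnit:
    task_id: str
    directive: str      # the narrow instruction from the Planner
    output_format: str  # e.g. "python" or "json"


def build_prompt(unit: WorkUnit) -> str:
    """Build the prompt from the WorkUnit alone: no history, no project context."""
    return (
        f"Task {unit.task_id}: {unit.directive}\n"
        f"Respond with {unit.output_format} only."
    )


def run_worker(unit: WorkUnit, model: Model) -> str:
    """Stateless: nothing is read from or written to shared state."""
    return model(build_prompt(unit))


if __name__ == "__main__":
    unit = WorkUnit("t2", "Write the interface for the OAuth callback handler", "python")
    # Stub model standing in for a real LLM call.
    fake_model: Model = lambda prompt: f"# stub completion for: {prompt.splitlines()[0]}"
    print(run_worker(unit, fake_model))
```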

3. The Critic: The Inspector

This is the most critical and most neglected component in the current hype cycle. The Critic is the adversarial force.

In a monolithic setup, the model effectively grades its own homework. It generates code and assumes it is correct. In a multi-agent system, the Critic validates the Worker’s output against the Planner’s constraints.

The Critic does not generate; it analyzes. It runs the compiler. It runs the linter. It verifies that the output conforms to the required JSON schema. If the output fails, the Critic rejects the unit of work and sends it back to the Worker with a specific error trace. This creates a feedback loop: a self-correcting mechanism that operates without human intervention.
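A minimal Critic sketch, under two assumptions: Worker output is either Python source or a JSON object, and `ast.parse` is an acceptable stand-in for “runs the compiler” (it catches syntax errors, not type or import errors; a real Critic would also invoke a linter and the test suite). The hand-rolled key-and-type check stands in for a full JSON Schema validator. `Verdict`, `critique_python`, and `critique_json` are hypothetical names. Each rejection carries a specific error string, which is what gets sent back to the Worker.

```python
import ast
import json
from dataclasses import dataclass, field


@dataclass
class Verdict:
    accepted: bool
    errors: list[str] = field(default_factory=list)  # fed back to the Worker on rejection


def critique_python(source: str) -> Verdict:
    """Reject source that does not parse; report the parser's line and message."""
    try:
        ast.parse(source)
    except SyntaxError as exc:
        return Verdict(False, [f"SyntaxError at line {exc.lineno}: {exc.msg}"])
    return Verdict(True)


def critique_json(text: str, required: dict[str, type]) -> Verdict:
    """Check that text is a JSON object containing the required keys and types.

    A deliberately small stand-in for a real JSON Schema validator.
    """
    try:
        payload = json.loads(text)
    except json.JSONDecodeError as exc:
        return Verdict(False, [f"Invalid JSON at position {exc.pos}: {exc.msg}"])
    if not isinstance(payload, dict):
        return Verdict(False, ["Top-level JSON value must be an object"])
    errors = []
    for key, expected in required.items():
        if key not in payload:
            errors.append(f"Missing key: {key}")
        elif not isinstance(payload[key], expected):
            errors.append(
                f"Key {key}: expected {expected.__name__}, "
                f"got {type(payload[key]).__name__}"
            )
    return Verdict(not errors, errors)


if __name__ == "__main__":
    schema = {"client_id": str, "scopes": list}
    print(critique_json('{"client_id": "abc"}', schema))
    print(critique_python("def handler(request:\n    pass"))
```

Given `{"client_id": "abc"}`, the JSON check rejects the unit with `Missing key: scopes`, a message precise enough for the Worker to fix on the next attempt.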

Orchestration Over Prompting

The shift to this architecture changes how we interface with AI. We stop being “Prompt Engineers” and start being “System Architects.”

In the monolithic era, improvement meant tweaking the prompt: changing adjectives, adding emotional pleas to the model, engaging in voodoo. In the multi-agent era, improvement means Orchestration.

Orchestration is the logic layer that binds the agents together. It is the control flow.

How many retries does the Worker get before the Planner needs to adjust the strategy?

What is the threshold for the Critic to escalate a failure to a human operator?

How is state passed between the Planner and the Worker?
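The three questions above map directly onto code. Below is a minimal orchestration loop, assuming the Worker and Critic are plain callables (stubs stand in for model calls). `max_retries` is the knob behind the first two questions; in this simplified sketch, exhausting it escalates straight to a human rather than first asking the Planner to re-plan. `UnitState` answers the third question by making everything passed between stages an explicit, loggable field. All names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional

# Hypothetical component signatures; real ones would wrap model calls.
Worker = Callable[[str, list[str]], str]  # (directive, previous errors) -> output
Critic = Callable[[str], list[str]]       # output -> errors (empty list means pass)


@dataclass
class UnitState:
    """All state passed between stages lives here, so it can be logged and replayed."""
    directive: str
    attempts: int = 0
    errors: list[str] = field(default_factory=list)
    output: Optional[str] = None
    status: str = "pending"  # "pending" | "accepted" | "escalated"


def orchestrate(directive: str, worker: Worker, critic: Critic,
                max_retries: int = 3) -> UnitState:
    """Run Worker -> Critic until the Critic accepts or the retry budget runs out."""
    state = UnitState(directive)
    while state.attempts < max_retries:
        state.attempts += 1
        # The Worker sees only the directive and the Critic's last error trace.
        candidate = worker(state.directive, state.errors)
        state.errors = critic(candidate)
        if not state.errors:
            state.output = candidate
            state.status = "accepted"
            return state
    state.status = "escalated"  # budget exhausted: hand off to a human operator
    return state


if __name__ == "__main__":
    # Stub Worker that only gets it right once it has seen an error trace.
    def worker(directive: str, errors: list[str]) -> str:
        return "def callback(): ..." if errors else "def callback(:"

    def critic(output: str) -> list[str]:
        return [] if output.endswith("...") else ["SyntaxError: unclosed '('"]

    print(orchestrate("Write the OAuth callback stub", worker, critic))
```

With these stubs the unit is accepted on the second attempt. Swapping the escalation branch for a call back into the Planner is a small change precisely because the control flow lives in ordinary code rather than inside a prompt.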

This makes the AI inspectable. If the system fails, you don’t stare at a black box wondering why the “vibes” were off. You look at the logs. Did the Planner fail to decompose the task? Did the Worker hallucinate a library? Did the Critic have a loose validation rule?
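Answering those questions requires that each stage leave a record. A minimal sketch, assuming a hypothetical `TraceEvent` schema with one structured event per stage, serialized as JSON lines; `first_failure` points at the stage where debugging should start. Note its limit: a Critic with a loose validation rule shows up as a *passing* event, so that failure mode is caught by benchmarking the Critic rather than by reading its log.

```python
import json
from dataclasses import asdict, dataclass
from typing import Optional


@dataclass(frozen=True)
class TraceEvent:
    """One structured record per stage (hypothetical schema)."""
    unit_id: str
    stage: str   # "planner" | "worker" | "critic"
    ok: bool
    detail: str


def to_json_lines(trace: list[TraceEvent]) -> str:
    """Serialize events one per line so they can be grepped or shipped to a log store."""
    return "\n".join(json.dumps(asdict(e), sort_keys=True) for e in trace)


def first_failure(trace: list[TraceEvent]) -> Optional[TraceEvent]:
    """Return the earliest failing event: the stage to inspect first."""
    return next((e for e in trace if not e.ok), None)


if __name__ == "__main__":
    # Illustrative trace; the module name "oauth_magic" is made up.
    trace = [
        TraceEvent("t2", "planner", True, "decomposed into 5 units"),
        TraceEvent("t2", "worker", True, "returned python source"),
        TraceEvent("t2", "critic", False, "import of unknown module 'oauth_magic'"),
    ]
    print(to_json_lines(trace))
    print("start debugging at:", first_failure(trace))
```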

You can debug a squad. You can only guess at a monolith.

Testability and Repeatability

The greatest sin of the monolithic LLM is its lack of repeatability. You run the same prompt twice and get different results. In an enterprise environment, this is unacceptable. You cannot build a business on a slot machine.

By breaking the system into microservices, we introduce Unit Testing for Agents.

We can benchmark the Worker agent on specific coding tasks. We can benchmark the Critic on its ability to catch errors. We can swap out the underlying model for the Worker (using a smaller, faster model) while keeping a heavy reasoning model for the Planner. We can optimize for unit economics, latency, and accuracy at the component level.
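A minimal sketch of testing one component in isolation, assuming a Critic can be reduced to a predicate that returns `True` when it rejects an output. `ROLE_MODELS` shows the per-role model swap as plain configuration; the model names are placeholders, not real model IDs. `score_critic` (a hypothetical helper) measures the two numbers that matter for a Critic: how many known-bad outputs it catches, and how many good outputs it wrongly rejects. In a real benchmark the labelled cases would come from a curated set of past Worker outputs.

```python
from typing import Callable

# Per-role model assignment as configuration. Placeholder names, not real model IDs.
ROLE_MODELS: dict[str, str] = {
    "planner": "large-reasoning-model",
    "worker": "small-fast-model",
    "critic": "small-fast-model",
}


def score_critic(rejects: Callable[[str], bool],
                 cases: list[tuple[str, bool]]) -> dict[str, float]:
    """Benchmark a Critic against labelled cases of (output, is_actually_bad).

    catch_rate: share of bad outputs the Critic rejected (higher is better).
    false_alarm_rate: share of good outputs it rejected (lower is better).
    """
    bad = [out for out, is_bad in cases if is_bad]
    good = [out for out, is_bad in cases if not is_bad]
    if not bad or not good:
        raise ValueError("need at least one bad and one good case")
    return {
        "catch_rate": sum(map(rejects, bad)) / len(bad),
        "false_alarm_rate": sum(map(rejects, good)) / len(good),
    }


if __name__ == "__main__":
    # A deliberately naive Critic: reject anything that still contains a TODO.
    naive_critic = lambda output: "TODO" in output
    cases = [
        ("return token", False),
        ("# TODO: validate state param", True),
        ("raise NotImplementedError", True),
        ("return redirect(url)", False),
    ]
    print(score_critic(naive_critic, cases))
```

On these cases the naive Critic catches only half of the known-bad outputs (it misses the `NotImplementedError` stub), which is exactly the kind of loose validation rule a component-level benchmark is meant to expose.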

The Quiet Reality

The noise in the market is about “AGI” and models that feel human. Ignore it. The signal is in the plumbing.

The future of AI deployment is not about a smarter chatbot. It is about a reliable, boring, invisible machine that processes cognitive loads with the same predictability as a database transaction.

We are moving from the age of the artist to the age of the architect. The hero, the one magical prompt, is dead. Long live the system.