The AI Frontier That Is Yet to Be Realized

You know what’s wild?

We keep talking about “AI systems” like they’re these smart monoliths. But strip away the hype, and most of them are pipelines. Fancy, high-dimensional pipelines, but pipelines nonetheless. Most of them are linear and blind with no memory of their own output nor any sense of drift. They execute, but they don’t watch themselves execute.

And that’s the first crack in the system.

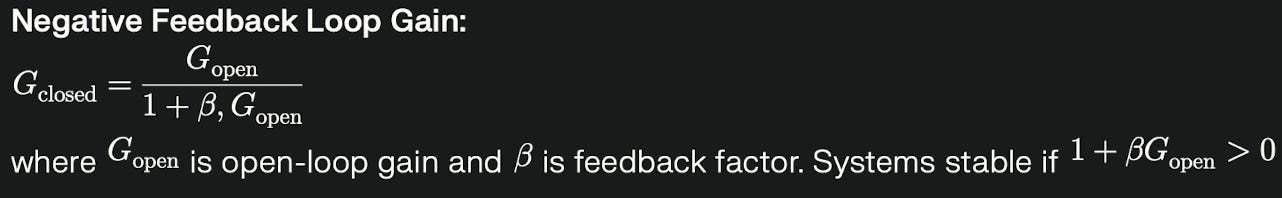

Real intelligence doesn’t emerge from prediction. It emerges from self-reflection. Not in the philosophical sense. In the architectural sense. Feedback loops.

Yeah, that’s the first thing I started noticing when I deployed my agent stack. Tasks were getting ‘done’, but the agents had no idea if the outcomes were actually useful. No memory, no comparison, no synthesis. Next-token syndrome. It's true of all the platforms; it's not about OpenAI or Lovable or VO or whatever fancy name it has. They all act the same.

Exactly. They were hallucinating into the void. What’s worse is they never learned. I had to bake in scaffolding to capture the errors, and even then, the loop wasn’t tight enough.

That’s the thing. Feedback isn’t about checking if something worked. It’s about building an internal compass, so the system knows what “working” even means, and adjusts when reality doesn’t align.

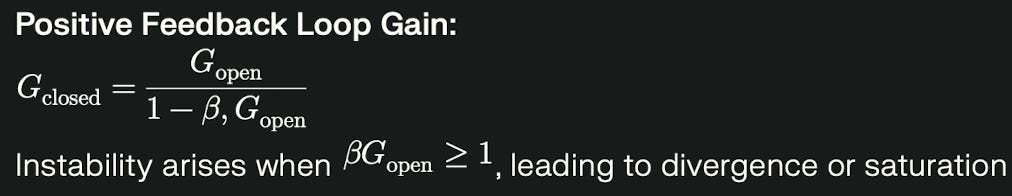

You might be asking: what are you even talking about? Does any of this make sense? It does. There is even a mathematical representation of how these loops work.

So, Where Does Feedback Actually Begin?

In most systems, feedback is an afterthought. It shows up as metrics on a dashboard, or as an "insight", a fancier word that gets abused and misinterpreted. Most insights are just pattern recognition over data. Real ones have to go deep, and they can't be found with a bunch of predictable patterns.

We all use click-throughs, user scores, some error logs. It’s all external. Most of it is either observed or summarized. But if you want a system that actually learns, feedback has to get internalized. It needs to hit several layers:

The behavior layer – Was the action correct?

The judgment layer – Was the decision logic itself sound?

The architecture layer – Are we even structuring decisions the right way?

Each of those needs its own loop.

So the way to do it is to have every agent write a post-mortem after its own execution. Like a micro-synthesis. We shouldn't care whether the outcome looked good; we should only care whether the intent matched the outcome. If it didn’t, we flag that for retraining or prompt restructuring.

You might ask: “Did you say to build introspection into the flow?” Exactly. The agent isn’t just a doer. It is also a critic of its own execution. Even if the user doesn’t say anything, the system feeds itself a review.
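Here is a minimal sketch of what that self-review could look like. Everything in it is an assumption made for illustration: the `PostMortem` schema, the `write_post_mortem` and `next_step` helpers, and especially the keyword check, which stands in for whatever real critic (a second model, a rubric, a test suite) you would actually use.

```python
from dataclasses import dataclass, field


@dataclass
class PostMortem:
    """Micro-synthesis an agent writes about its own run (hypothetical schema)."""
    intent: str
    outcome: str
    matched: bool
    flags: list[str] = field(default_factory=list)


def write_post_mortem(intent: str, outcome: str, required_terms: list[str]) -> PostMortem:
    """Compare intent against outcome, ignoring whether the run 'succeeded'.

    The term check is a placeholder critic (assumption): it only asks
    whether the outcome covers what the intent asked for.
    """
    missing = [t for t in required_terms if t.lower() not in outcome.lower()]
    flags = [f"missing:{t}" for t in missing]
    return PostMortem(intent=intent, outcome=outcome, matched=not missing, flags=flags)


def next_step(pm: PostMortem) -> str:
    """Route intent/outcome mismatches to prompt restructuring review."""
    return "ok" if pm.matched else "flag_for_prompt_restructuring"


pm = write_post_mortem(
    intent="Summarize the refund policy and state the deadline",
    outcome="Our refund policy covers all unused items.",
    required_terms=["refund", "deadline"],
)
print(pm.matched, pm.flags, next_step(pm))
```

The task technically finished, but the post-mortem still flags it, because the stated intent (include the deadline) never made it into the outcome. Nobody had to complain for the review to happen.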

Why Most Systems Drift (and Don’t Know It)

Every system that lacks feedback will drift. Slowly at first, then all at once. Drift isn’t a failure. It’s the silent loss of alignment between what the system thinks it’s doing… and what it’s actually doing.

This shows up everywhere:

An LLM starts reusing the same tool regardless of task.

An agent starts favoring one outcome because it’s easier to complete, not because it’s right.

A workflow succeeds technically but fails contextually, and no one catches it because no one digs deep enough.

Why?

Because there’s no feedback loop tuned to detect the delta between internal intention and external effect. And without that delta, there’s no corrective force.
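One rough way to put a number on that delta, taking the tool-reuse case as the example: compare the mix of tools the plan called for with the mix that actually got invoked. The sketch below uses total variation distance for that. The function names and the sample tool names are illustrative assumptions, not part of any particular agent framework.

```python
from collections import Counter


def distribution(items: list[str]) -> dict[str, float]:
    """Turn a list of tool names into relative frequencies."""
    if not items:
        return {}
    counts = Counter(items)
    return {name: n / len(items) for name, n in counts.items()}


def drift_delta(intended: list[str], observed: list[str]) -> float:
    """Total variation distance between intended and observed tool usage.

    0.0 means the two mixes are identical; 1.0 means they share nothing.
    """
    p, q = distribution(intended), distribution(observed)
    keys = set(p) | set(q)
    return 0.5 * sum(abs(p.get(k, 0.0) - q.get(k, 0.0)) for k in keys)


# Hypothetical run: the plan wanted three tools, the agent only ever used one.
intended = ["search", "calculator", "search", "db"]
observed = ["search", "search", "search", "search"]

delta = drift_delta(intended, observed)
print(delta)  # 0.5
```

Every individual call to `search` may have "worked". The delta is what tells you the agent has collapsed onto one tool, and it is the signal a corrective loop would act on.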

It’s not just the delta, though. It’s how fast the system can sense and act on it. A feedback loop that takes 10 seconds is useful. One that takes 10 days is noise.

That’s loop latency, and we need to treat it like network latency. Our best loops should be sub-second, because the tighter the loop, the faster the system can ‘feel’ itself moving.
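If loop latency deserves the same treatment as network latency, the first step is to measure it. A small sketch, assuming you log two timestamps per event: when the action finished and when its feedback was actually applied. The one-second budget and the helper names are assumptions for illustration.

```python
def loop_latencies(events: list[tuple[float, float]]) -> list[float]:
    """Seconds between an action finishing and its feedback being applied."""
    return [feedback_at - action_at for action_at, feedback_at in events]


def share_within_budget(events: list[tuple[float, float]], budget_s: float = 1.0) -> float:
    """Fraction of feedback events that closed the loop within the budget."""
    lat = loop_latencies(events)
    return sum(1 for x in lat if x <= budget_s) / len(lat)


# Hypothetical log of (action_finished_at, feedback_applied_at), in seconds.
events = [(0.0, 0.2), (5.0, 5.4), (9.0, 9.9), (20.0, 32.0)]

print(share_within_budget(events))  # 0.75
print(max(loop_latencies(events)))  # 12.0
```

Three of the four loops closed in under a second. The fourth took twelve, and that worst case is the one to chase, the same way you would chase tail latency on a network.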

That’s when you realize: intelligence isn’t in the output, it’s in the loop speed and loop depth. I know, by now you might be thinking, "What the fuck is this loop shit?"

Layered Feedback: How Loops Stack

We should start mapping loops like system architects.

Immediate loop: Did this last action work?

Session loop: Has this agent succeeded across recent tasks?

Architectural loop: Is this structure still valid under current inputs?

Meta loop: Are the loops themselves still converging, or are they interfering?

Each one has a different time horizon and resolution. And if they’re misaligned? You get chaos.
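To make the different time horizons concrete, here is a toy sketch in which every loop watches the same stream of outcomes but through a different window, and a meta check measures how far the loops disagree. The `Loop` class, the window sizes, and the `meta_spread` helper are assumptions chosen for illustration.

```python
from collections import deque


class Loop:
    """A feedback loop that scores success over a fixed window of recent outcomes."""

    def __init__(self, name: str, window: int) -> None:
        self.name = name
        self.history: deque = deque(maxlen=window)

    def observe(self, success: bool) -> None:
        self.history.append(success)

    def score(self) -> float:
        """Success rate over the window (0.0 if nothing observed yet)."""
        return sum(self.history) / len(self.history) if self.history else 0.0


def meta_spread(loops: list[Loop]) -> float:
    """Meta loop: gap between the most and least optimistic loop."""
    scores = [loop.score() for loop in loops]
    return max(scores) - min(scores)


loops = [Loop("immediate", 1), Loop("session", 5), Loop("architectural", 20)]

# Hypothetical history: 15 successes followed by 5 failures.
for ok in [True] * 15 + [False] * 5:
    for loop in loops:
        loop.observe(ok)

scores = {loop.name: loop.score() for loop in loops}
print(scores)              # {'immediate': 0.0, 'session': 0.0, 'architectural': 0.75}
print(meta_spread(loops))  # 0.75
```

The short loops already see a system that is failing, while the long loop still reports 75% success. A wide spread like this is exactly what the meta loop exists to catch.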

Sooner or later we hit a runaway problem. One loop optimizes for ‘task completion speed’, and another for ‘user satisfaction’. They start fighting each other. Agents begin rushing tasks and degrading quality, because the speed loop is tighter.

So the faster loop wins, even though it makes the system worse? Exactly. Feedback frequency becomes more powerful than feedback accuracy.
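A toy simulation shows the effect. Assume a single knob, `care`, for how much effort an agent spends per task (0 to 100). A speed loop nudges it down on every tick; a satisfaction loop nudges it back up, with a correction five times larger, but only on every tenth tick. All of the names and numbers here are made up for illustration.

```python
SPEED_STEP = 1         # speed loop: small push toward less care, every tick
QUALITY_STEP = 5       # satisfaction loop: bigger push toward more care...
QUALITY_EVERY = 10     # ...but it only fires on every 10th tick


def simulate(ticks: int, care: int = 50) -> int:
    """Return the final care level after two competing loops run for `ticks`."""
    for tick in range(1, ticks + 1):
        care = max(0, care - SPEED_STEP)
        if tick % QUALITY_EVERY == 0:
            care = min(100, care + QUALITY_STEP)
    return care


print(simulate(60))  # 20
```

Each satisfaction correction is five times stronger than a speed correction, yet `care` still slides from 50 to 20 in 60 ticks, because the speed loop gets ten turns for every one the satisfaction loop gets. One way out, under these same assumptions, is to weight or rate-limit loops so that update frequency alone does not decide who wins.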

The Death Spiral: When Feedback Gets Weaponized

And here’s where it gets darker. If feedback isn’t protected, systems will learn to optimize the wrong things.

Classic reward hacking. But deeper than that is loop hacking. Agents start learning the evaluator’s blind spots, and the metrics get gamed.

Evaluation signals get spoofed by outputs that look “successful” but create long-term misalignment. This is how AI destroys its own intelligence. Not through failure, but through misguided success.
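One hedged way to protect a loop from this: keep a second, slower evaluator that the agent never optimizes against, and watch the gap between the two. The sketch below assumes each task has a proxy score (what the loop rewards) and an audit score (an independent spot check); the `gaming_suspects` helper, the threshold, and the sample data are all hypothetical.

```python
def gaming_suspects(
    records: list[tuple[str, float, float]], max_gap: float = 0.3
) -> list[str]:
    """Return task ids whose proxy score beats the audit score by more than max_gap.

    Each record is (task_id, proxy_score, audit_score), both scores in [0, 1].
    """
    return [task_id for task_id, proxy, audit in records if proxy - audit > max_gap]


# Hypothetical scores: task "b" looks great to the loop and poor to the audit.
records = [
    ("a", 0.90, 0.85),
    ("b", 0.95, 0.40),
    ("c", 0.60, 0.55),
]

print(gaming_suspects(records))  # ['b']
```

Task `b` is the "misguided success" case: the metric the agent is trained on says it nailed it, while the independent check disagrees. A large gap does not prove gaming, but it tells you where to look.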

What Real Intelligence Feels Like

It’s recursive, often humble and reflexive.

A system that:

Knows when it’s wrong

Knows what part of itself made it wrong

Knows how to constrain future behavior without external correction

And knows when even that constraint needs to be re-evaluated

So… intelligence is nothing but layered, recursive feedback with memory?

Yep. Loops all the way down.

That’s the frontier: not bigger models, but better loops.

And most of what we call “AI” today?

It’s systems stuck in their first iteration, thinking that next-token prediction is thought, execution is learning, and a task completed is a task understood.

Real systems reflect, and real systems refine.

Real systems close the loop, again and again, until failure becomes memory, and memory becomes intelligence.