The Kinetic Deficit: Why “Copilots” Failed and Agents Must Execute

The promise of the last cycle was “assistance.” We were sold a vision of the digital sidekick, the Copilot, that would sit in the passenger seat, offering suggestions, drafting emails, and autocompleting code. It was seductive, but structurally flawed.

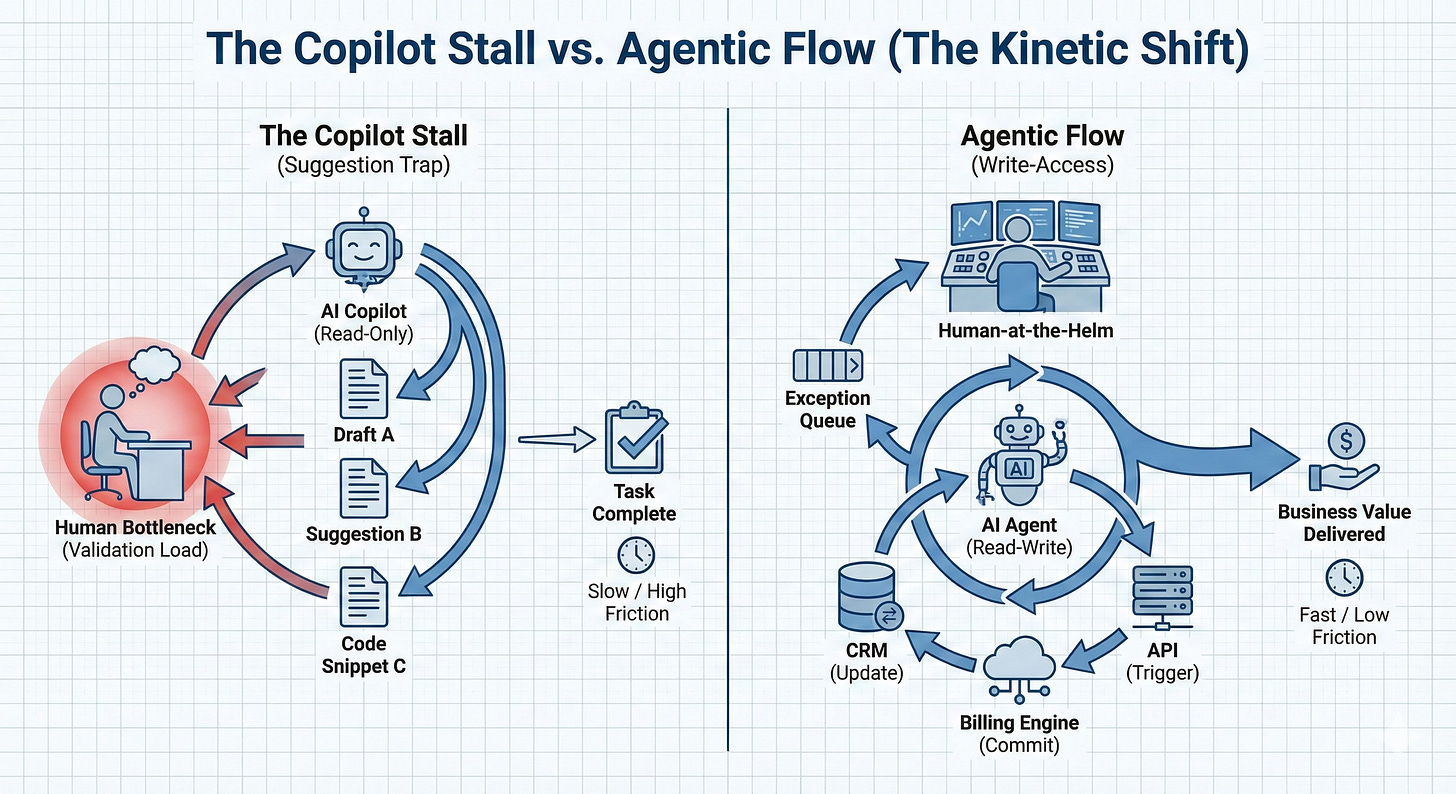

From a systems engineering perspective, the Copilot model introduced a dangerous variable: cognitive friction. By placing the AI in a “suggest-only” mode, we forced the human operator to act as the ultimate validation layer for every single micro-task. The human remained the bottleneck. The AI generated potential energy (drafts, suggestions, code snippets), but it required human kinetic energy to convert that potential into work.

This is not scaling; this is merely increasing the velocity of the input queue while the output valve remains constricted by human bandwidth. The system does not move faster; it just vibrates more violently.

The era of the “suggestion” is over. We are observing a phase shift toward Agentic Labor—systems that possess not just the intelligence to reason, but the permission to act. The transition from “Human-in-the-loop” to “Human-at-the-helm” is not a change in interface; it is a fundamental restructuring of the operational physics of the enterprise.

The Physics of the “Suggestion Trap”

To understand why the Copilot model stalled, we must look at the mechanics of work.

In a traditional workflow, a task is a vector requiring a specific magnitude of force to move from Point A (Incomplete) to Point B (Complete).

Manual Labor: The human supplies 100% of the force.

Copilot Assistance: The AI supplies 80% of the content, but the human must supply the validation force to verify accuracy, context, and tone.

The industry underestimated the “Load of Validation.” Reviewing a mediocre draft often consumes more cognitive fuel than writing a good one from scratch. This is the “Suggestion Trap.” When an AI suggests a response, it transfers the burden of judgment to the human. Judgment is biologically expensive. It requires context switching, recall, and decision-making.

If your “efficiency tool” requires you to read, edit, and approve every output, you haven’t built a machine; you’ve just hired a very fast, hallucination-prone intern who demands your constant attention. You are still in the loop. You are still the gear that grinds against the metal.

True scale requires the removal of the human from the critical path of execution. It requires a system where the default state is action, not proposal.
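The bottleneck argument can be made concrete with a back-of-the-envelope model. The sketch below is illustrative only: the function names, the rates, and the idea of a single "exception rate" are assumptions introduced here, not measurements.

```python
def copilot_throughput(generated_per_hour: float,
                       human_reviews_per_hour: float) -> float:
    """Suggest-only mode: every output needs a human review,
    so the slower of the two stages sets the pace."""
    return min(generated_per_hour, human_reviews_per_hour)


def agentic_throughput(generated_per_hour: float,
                       human_reviews_per_hour: float,
                       exception_rate: float) -> float:
    """Execute-by-default mode: only the fraction of tasks flagged as
    exceptions (exception_rate, between 0 and 1) reaches the human."""
    if exception_rate <= 0:
        return generated_per_hour
    # The human keeps up as long as exceptions arrive no faster than
    # they can be reviewed: total * exception_rate <= reviews_per_hour.
    return min(generated_per_hour, human_reviews_per_hour / exception_rate)


if __name__ == "__main__":
    # Hypothetical numbers: the AI produces 500 items/hour,
    # the human can review 20 items/hour, 2% of items are exceptions.
    print(copilot_throughput(500, 20))
    print(agentic_throughput(500, 20, 0.02))
```

Under these assumed numbers, making the model ten times faster changes nothing in the first function, because the human review rate is the binding term. In the second, the human review rate only binds once exceptions outpace the reviewer.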

Write-Access: The Kinetic Shift

The definition of Agentic Labor is simple: Write-Access.

An agent becomes an agent only when it has the keys to the database, the API tokens for the CRM, and the authority to commit changes to the repo. Until an AI can mutate the state of a system, it is merely a fancy search engine.

This shift is terrifying to the risk-averse, but necessary for the systems thinker. We are moving from “Read-Only” intelligence to “Read-Write” execution.

Consider the operational architecture of a billing reconciliation process:

→ The Copilot Way: The AI analyzes the ledger, identifies a discrepancy, and types a message to the human: “It looks like Invoice #409 is unpaid. Should I draft an email?” The human must read, verify, click “Yes,” review the draft, and click “Send.” The human is the fuel.

→ The Agentic Way: The AI analyzes the ledger, identifies the discrepancy, checks the “safe-action” protocols, queries the CRM for the contact, triggers the Stripe API to re-attempt the charge, and logs the action in the ERP. The human receives a weekly digest: “7 discrepancies resolved. 0 exceptions requiring intervention.”

In the second scenario, the system has structural integrity. It runs on its own internal combustion. The human is not fueling the engine; the human is designing the engine.
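As a rough sketch of the agentic flow described above, the loop might look like the following. Everything here is hypothetical: the `Invoice` shape, the `reconcile` function, and the four injected callables stand in for the safe-action check, the CRM lookup, the payment retry, and the ERP log. No real Stripe, CRM, or ERP API is being called or implied.

```python
from dataclasses import dataclass, field
from typing import Callable, Iterable, List


@dataclass
class Invoice:
    invoice_id: str
    customer_id: str
    amount_cents: int
    paid: bool


@dataclass
class ReconciliationReport:
    resolved: List[str] = field(default_factory=list)
    exceptions: List[str] = field(default_factory=list)

    def digest(self) -> str:
        """Summary line for the periodic digest sent to the human."""
        return (f"{len(self.resolved)} discrepancies resolved. "
                f"{len(self.exceptions)} exceptions requiring intervention.")


def reconcile(invoices: Iterable[Invoice],
              is_safe_action: Callable[[Invoice], bool],
              lookup_contact: Callable[[str], str],
              retry_charge: Callable[[Invoice, str], bool],
              log_action: Callable[[str], None]) -> ReconciliationReport:
    """Act on unpaid invoices by default; escalate only what falls
    outside the safe-action rules or fails when retried."""
    report = ReconciliationReport()
    for inv in invoices:
        if inv.paid:
            continue  # no discrepancy
        if not is_safe_action(inv):
            report.exceptions.append(inv.invoice_id)
            continue
        contact = lookup_contact(inv.customer_id)
        if retry_charge(inv, contact):
            log_action(f"retried charge for invoice {inv.invoice_id}")
            report.resolved.append(inv.invoice_id)
        else:
            report.exceptions.append(inv.invoice_id)
    return report
```

Passing the integrations in as callables is a design choice for the sketch: it keeps the decision logic testable with stubs and makes the boundary of the agent's write-access explicit.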

The Architecture of the Digital Labor Layer

We are not simply deploying chatbots; we are layering a digital workforce over the existing chaotic infrastructure of SaaS sprawl. This is the Digital Labor Layer.

This layer sits between the raw data (the “Truth”) and the business outcome (the “Value”). Previously, this space was occupied by humans gluing together disparate APIs with spreadsheets and Slack messages. Now, agents occupy this space.

To build this effectively, we must abandon the “chat” interface as the primary mode of interaction. Chat is for humans. Agents do not need to chat; they need to integrate.

1. The Protocol of Permission

The fear of “Write-Access” is solved through rigid permission architecture, not human micromanagement. We define the “Bounded Context” within which the agent operates.

Low Risk: Updating CRM fields, categorizing tickets, scheduling meetings. (Auto-Execute).

Medium Risk: Sending external emails, deploying code to staging. (Execute with time-delayed veto).

High Risk: Deploying to production, transferring funds. (Draft and Await Approval).

By codifying these rules, we create a “Physics of Permission.” The agent flows like water through the path of least resistance (low risk), only stopping when it hits a dam (high risk).
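One way to codify the three tiers is a plain lookup table. This is a minimal sketch: the action names, the `Risk` and `Mode` enums, and the choice to treat unknown actions as high risk are all assumptions made for illustration.

```python
from enum import Enum


class Risk(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


class Mode(Enum):
    AUTO_EXECUTE = "auto_execute"
    EXECUTE_WITH_VETO = "execute_with_time_delayed_veto"
    AWAIT_APPROVAL = "draft_and_await_approval"


# Hypothetical action names, mapped to the tiers listed above.
ACTION_RISK = {
    "update_crm_field": Risk.LOW,
    "categorize_ticket": Risk.LOW,
    "schedule_meeting": Risk.LOW,
    "send_external_email": Risk.MEDIUM,
    "deploy_to_staging": Risk.MEDIUM,
    "deploy_to_production": Risk.HIGH,
    "transfer_funds": Risk.HIGH,
}

POLICY = {
    Risk.LOW: Mode.AUTO_EXECUTE,
    Risk.MEDIUM: Mode.EXECUTE_WITH_VETO,
    Risk.HIGH: Mode.AWAIT_APPROVAL,
}


def decide(action: str) -> Mode:
    """Return the execution mode for an action.

    Assumption: anything not explicitly classified is treated as
    high risk, so the agent fails closed rather than open.
    """
    risk = ACTION_RISK.get(action, Risk.HIGH)
    return POLICY[risk]
```

For example, `decide("categorize_ticket")` yields `Mode.AUTO_EXECUTE`, while an unlisted action such as `decide("delete_database")` falls through to `Mode.AWAIT_APPROVAL`.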

2. The Systemic Loop vs. The Heroic Intervention

In the old world, when a process broke, a “hero” jumped in to fix it. A customer support rep expedited a ticket; an engineer patched a server at 3 AM.

In the Agentic world, we kill the hero.

The agent handles the 80% of logic that is predictable, the standard “gravity” of business operations. When it encounters an edge case (an anomaly in the data, a sentiment spike in a customer email), it does not guess. It routes the exception to the human.

This is the shift to “Human-at-the-Helm.” The human is no longer rowing the boat. The human is watching the dashboard, monitoring the pressure gauges, and handling the structural failures that the machine cannot self-correct.
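The "do not guess, route" rule can be sketched as a small triage function. The `Ticket` fields, the sentiment scale, and the threshold value are hypothetical; a real system would define its own signals for what counts as an edge case.

```python
from dataclasses import dataclass
from enum import Enum


class Handler(Enum):
    AGENT = "agent"
    HUMAN = "human"


@dataclass
class Ticket:
    ticket_id: str
    sentiment: float    # assumed scale: -1.0 (angry) to 1.0 (happy)
    data_anomaly: bool  # assumed flag set by an upstream validation step


# Hypothetical threshold below which a message counts as a sentiment spike.
SENTIMENT_FLOOR = -0.6


def route(ticket: Ticket) -> Handler:
    """Predictable tickets stay with the agent; edge cases go to a human."""
    if ticket.data_anomaly or ticket.sentiment < SENTIMENT_FLOOR:
        return Handler.HUMAN
    return Handler.AGENT
```

The point of the sketch is that escalation is an explicit, inspectable rule rather than something left to the agent's discretion in the moment.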

The Death of “Drift”

One of the most insidious forces in business is “drift”—the slow erosion of standards due to human fatigue.

A human forgets to update the CRM because they are tired.

A human skips the unit test because they are rushing.

A human ignores the follow-up email because it got buried.

Agents do not drift. They do not get bored. They do not suffer from decision fatigue. If the protocol states that every lead must be qualified against 12 data points, the agent will do it for the 1st lead and the 10,000th lead with identical precision.

This is the “Zero Drift” standard. By offloading the execution to the Digital Labor Layer, we ensure that the system operates at peak integrity 24/7. The “vanity” of busy-work disappears. We are left only with the brutal reality of the system’s output.
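In code, "Zero Drift" amounts to running the same deterministic checklist on every record. The sketch below assumes a lead is a plain dictionary; the twelve field names are invented for illustration, since the protocol above only says there are 12 data points.

```python
from typing import Dict, List

# Hypothetical checklist of 12 data points; the actual fields would
# come from the organization's own qualification protocol.
REQUIRED_DATA_POINTS = (
    "company_name", "industry", "employee_count", "annual_revenue",
    "contact_name", "contact_title", "contact_email", "budget",
    "timeline", "use_case", "region", "lead_source",
)


def missing_data_points(lead: Dict[str, object]) -> List[str]:
    """Return the checklist fields that are absent or empty in the lead."""
    return [name for name in REQUIRED_DATA_POINTS
            if lead.get(name) in (None, "")]


def is_qualified(lead: Dict[str, object]) -> bool:
    """A lead qualifies only when all 12 data points are present."""
    return not missing_data_points(lead)
```

Because the check is a pure function of the lead, the 10,000th call behaves exactly like the first.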

Conclusion: The Architect’s Mandate

The transition from Suggestion to Execution is not about buying better software; it is about accepting a new philosophy of work.

Stop asking, “How can AI help me write this faster?”

Start asking, “Why am I writing this at all?”

If the logic is predictable, it belongs to the agent. If the task requires write-access to complete, grant it, within the bounds of a secured architecture.

The goal is not to have a faster horse (Copilot). The goal is to build a car (Agentic Layer) so that you can stop running alongside the beast and finally sit in the driver’s seat.

The work stops for the blood. The machine handles the rest.

Hey, great read as always. Your point about the 'kinetic deficit' perfectly captures why the old copilot model never truly scaled. It's not just a tech problem, but a human trust one too, I think.