The Weight of Rust: The Structural Correction of 2026

Gravity is the only law that never sleeps. It waits for the structure to exceed its load-bearing capacity, and then it corrects the error.

For the last two years, the B2B SaaS industry has been engaged in an architectural fallacy. We spent 2024 and 2025 bolting gargantuan AI appendages onto chassis that were never designed to hold them. We measured success by the number of new protrusions (the chatbot, the summarizer, the generative interface), confusing mass with muscle. We called it innovation. It was actually drift.

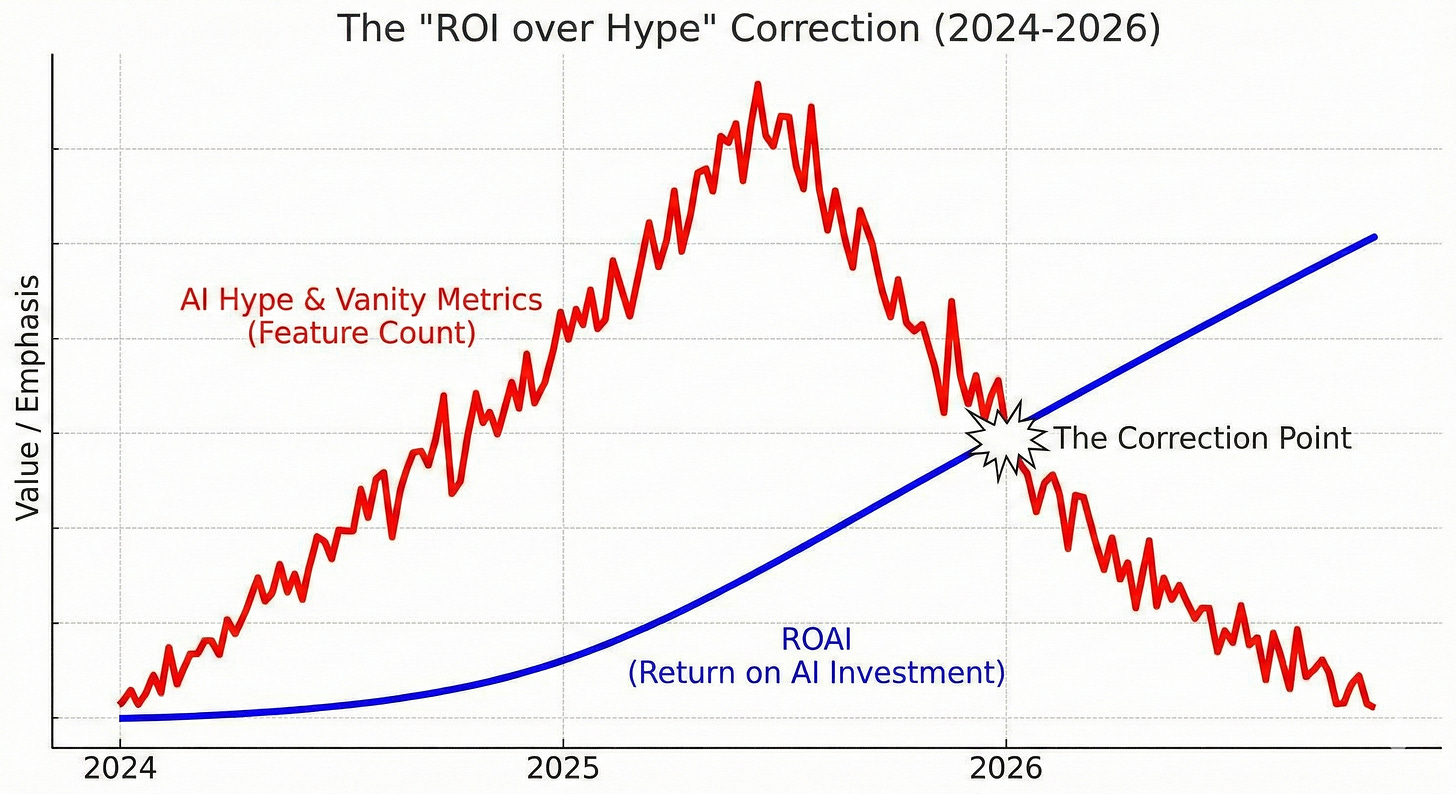

Now, the bill for that drift has arrived. The “AI Hype” cycle has collided with the immutable physics of the P&L. The vanity metrics that sustained the previous cycles (feature velocity, model count, demo flash) have rusted over. In 2026, the boardrooms are no longer asking what AI can do; they are demanding to know what it returns.

This is the “ROI over Hype” Correction. It is not a pivot; it is a structural audit. We are stripping the machine down to the studs. If an AI component does not act as a load-bearing pillar, whether by shortening the release cycle, compressing customer acquisition cost (CAC), or chemically altering retention, it is not a feature. It is rot. And the rot must be cut.

The Architecture of Vanity

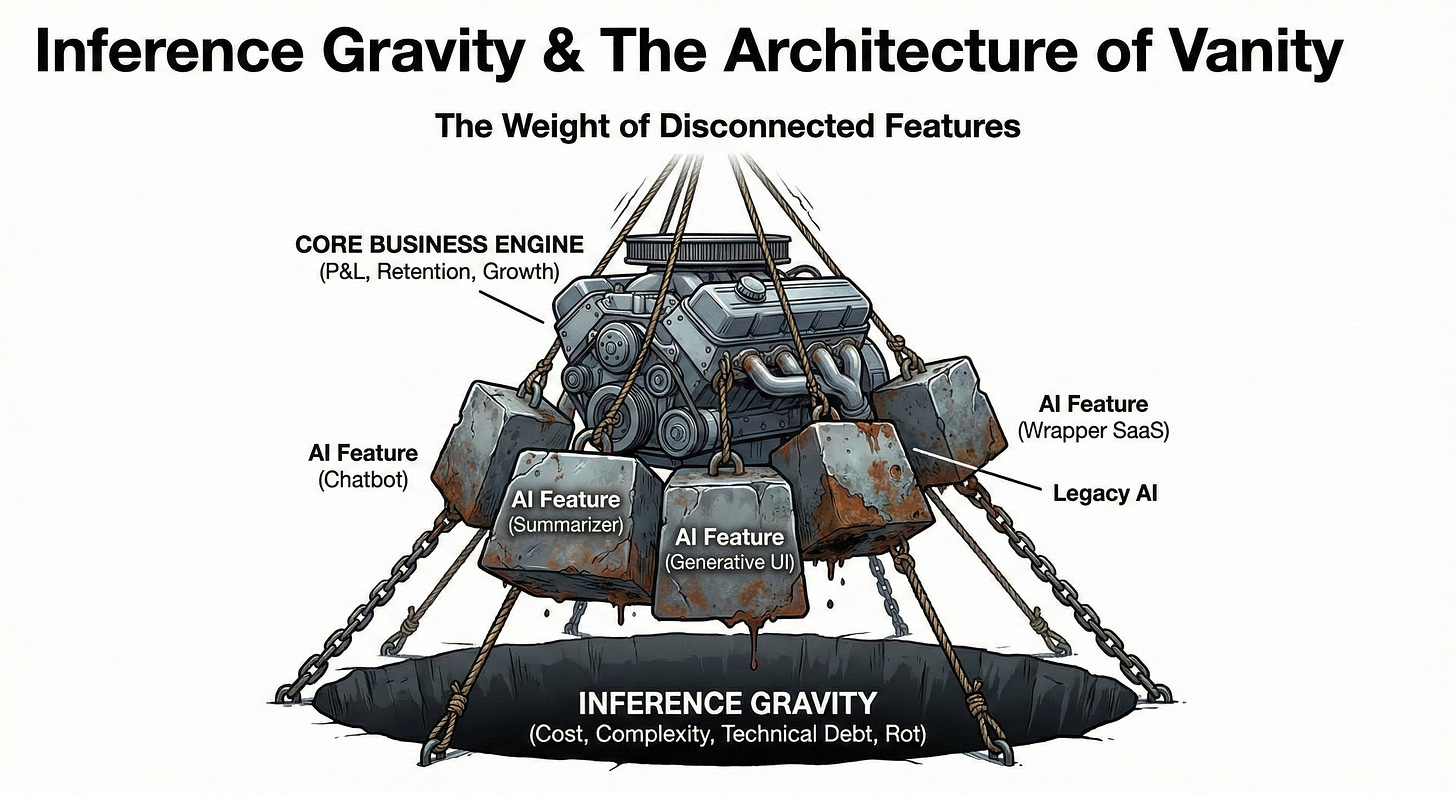

To understand the correction, we must first analyze the failure of the previous system.

In 2024, the prevailing strategy was additive. The logic was linear and flawed: more AI features equal a higher valuation. Founders and Product Managers operated under the delusion that AI was a garnish, a layer of magic dust that could be sprinkled over a mediocre product to hide its lack of Product-Market Fit (PMF).

We saw the proliferation of “Wrapper SaaS” (thin UI layers over commoditized models) and the bloating of established platforms with hallucinations of utility. This created a massive accumulation of technical and operational debt. Every new AI feature introduced a new vector of “inference gravity.”

Inference Gravity is the cost that accumulates not just in compute, but in complexity. Every token generated carries a micro-cost, but every hallucination carries a macro-liability. By late 2025, companies found themselves trapped in high-friction loops. They were spending millions on inference costs for features that users engaged with once, found amusing, and then abandoned.

The system was running hot, burning capital to generate heat, not motion. The “Heroics” of the engineering teams keeping these fragile pipelines alive masked the reality: the core engine was stalling.

The vanity labels of 2024, “AI-powered” and “GenAI native,” are now red flags. They signal a lack of focus. They suggest a company that is scattering its fuel rather than directing it into the combustion chamber.

The ROAI Mandate (Return on AI Investment)

The correction of 2026 is driven by a single, ruthless metric: ROAI.

Boards have stopped funding science projects. The era of “exploratory AI budgets” is closed. The capital environment has shifted from growth-at-all-costs to efficiency-at-all-costs. In this environment, AI is treated as an asset class that must justify its existence on the balance sheet.

ROAI is not a fuzzy sentiment. It is a verifiable equation. It asks: For every dollar of inference cost and engineering time invested in this model, how many dollars of operational friction did we remove?
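To make the equation concrete, here is a minimal sketch of one way the ratio could be computed. The `AIFeatureLedger` type, its field names, and every dollar figure below are hypothetical placeholders for illustration, not measured data; a real ledger would need an agreed method for pricing engineering time and "friction removed."

```python
from dataclasses import dataclass


@dataclass
class AIFeatureLedger:
    """Hypothetical per-feature ledger for one reporting period, in dollars."""
    inference_cost: float     # model/API spend
    engineering_cost: float   # build and maintenance time, priced in dollars
    friction_removed: float   # operational cost the feature eliminated


def roai(ledger: AIFeatureLedger) -> float:
    """Return dollars of friction removed per dollar invested."""
    invested = ledger.inference_cost + ledger.engineering_cost
    if invested <= 0:
        raise ValueError("invested cost must be positive")
    return ledger.friction_removed / invested


# Illustrative numbers only.
support_agent = AIFeatureLedger(inference_cost=40_000, engineering_cost=60_000,
                                friction_removed=250_000)
summarizer = AIFeatureLedger(inference_cost=30_000, engineering_cost=50_000,
                             friction_removed=20_000)

print(roai(support_agent))  # 2.5
print(roai(summarizer))     # 0.25
```

Under this reading, a ratio above 1.0 means the feature removes more cost than it consumes; anything below 1.0 is adding weight.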

If you implement an AI agent for customer support, the metric is not “customer satisfaction.” The metric is “headcount deflection.” Did the agent allow you to scale support volume by 3x without hiring a single new human? If yes, that is ROAI. If not, and you still need a human in the loop to clean up the AI’s mess, then you have merely added a layer of cost. You have increased the gravity without increasing the lift.

This demand for verifiable outcomes is forcing a purge. We are seeing the mass deprecation of “nice-to-have” AI. The summarization tool that nobody reads? Deprecated. The image generator that doesn’t drive conversion? Killed.

This is the “Quiet Heavyweight” approach to product management. It is skeptical, unflinching, and indifferent to hype. It looks at the dashboard and sees only two colors: the black of profit and the red of waste.

The Rise of the AI Gateway: Centralizing Control

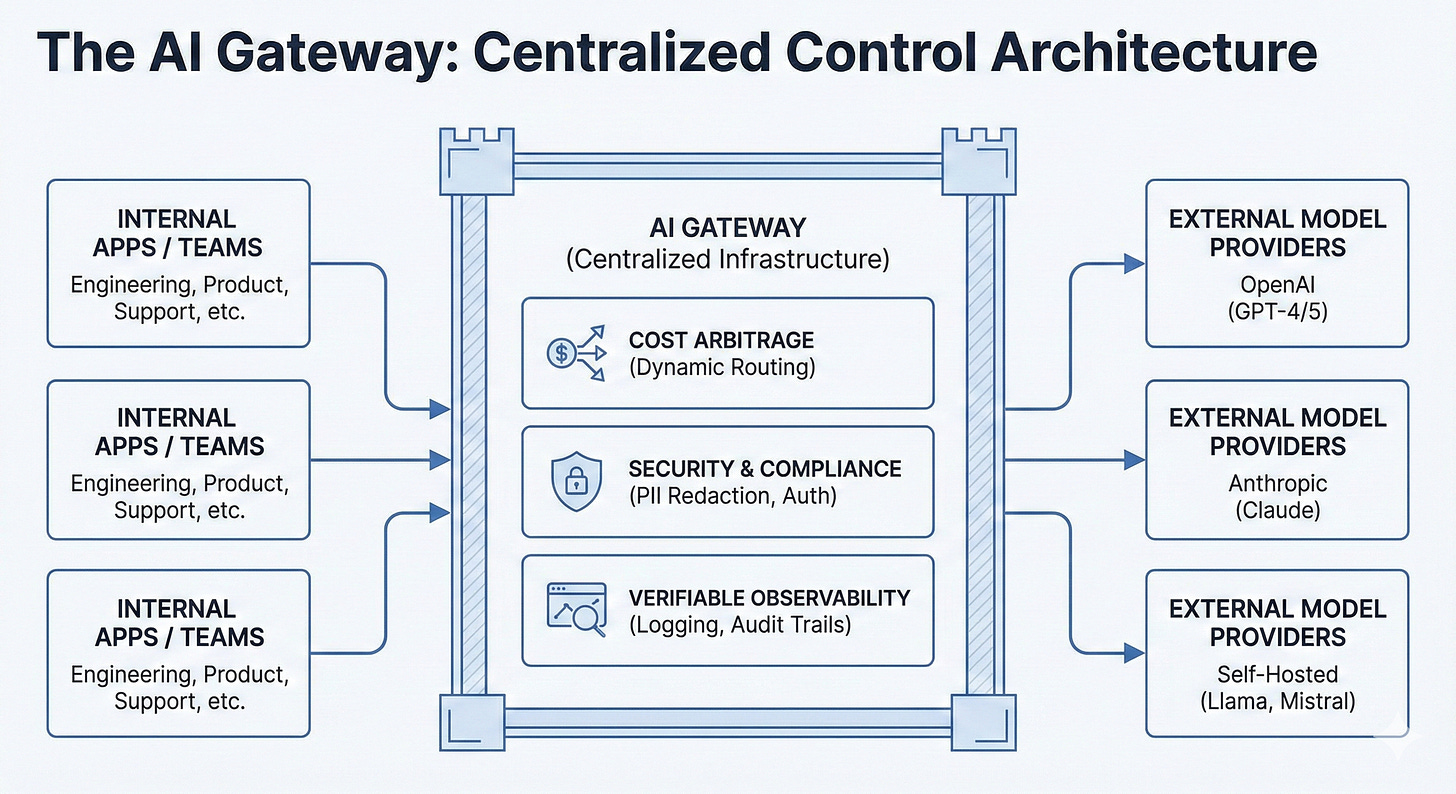

As we purge the rot, we are also hardening the infrastructure. The scattered, ad-hoc API calls of the past, where every engineering team spun up its own OpenAI or Anthropic integration, are being shut down. They represent a security hole and a financial leak.

In their place, we are seeing the rise of the AI Gateway.

The AI Gateway is the new load-bearing wall of the enterprise stack. It is a centralized infrastructure layer that sits between the internal applications and the external model providers. It serves three critical functions:

→ Cost Arbitrage: The Gateway routes prompts to the most efficient model for the specific task. Does this query require the reasoning depth of a GPT-5 class model, or can it be handled by a cheaper, faster Llama instance? The Gateway decides, dynamically managing the “inference gravity” to ensure the lowest possible cost per outcome.

→ Security and Compliance: It acts as the airlock. No data leaves the organization without passing through the Gateway’s sanitization protocols. PII (Personally Identifiable Information) is redacted automatically. The systemic risk of data leakage is contained at the source.

→ Verifiable Observability: The Gateway provides the truth. It logs every input and every output, allowing the organization to audit the quality of the AI’s decisions. It turns the “black box” of LLMs into a transparent system where errors can be traced, diagnosed, and engineered out.
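The three functions above can be sketched in a few dozen lines. This is a toy illustration under stated assumptions, not a production gateway: the tier names and prices in `MODELS`, the routing rule, the two regex patterns, and the `backend` callable are all hypothetical, and real PII detection needs far more than two regular expressions.

```python
import re
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical model tiers with made-up prices per 1K tokens (not real pricing).
MODELS = {"small": 0.0005, "large": 0.01}

# Illustrative patterns only: e-mail addresses and US SSN-shaped strings.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
SSN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def redact(text: str) -> str:
    """Security: replace matched PII with placeholders before it leaves."""
    return SSN.sub("[SSN]", EMAIL.sub("[EMAIL]", text))


def choose_model(prompt: str, needs_reasoning: bool) -> str:
    """Cost: toy rule that sends long or reasoning-heavy prompts to the large tier."""
    return "large" if needs_reasoning or len(prompt.split()) > 200 else "small"


@dataclass
class Gateway:
    backend: Callable[[str, str], str]  # (model, prompt) -> completion
    log: list = field(default_factory=list)

    def complete(self, prompt: str, needs_reasoning: bool = False) -> str:
        clean = redact(prompt)
        model = choose_model(clean, needs_reasoning)
        output = self.backend(model, clean)
        # Observability: record every input/output pair with a rough cost
        # estimate (word count stands in for token count here).
        est_cost = len(clean.split()) / 1000 * MODELS[model]
        self.log.append({"model": model, "input": clean,
                         "output": output, "est_cost": est_cost})
        return output


def fake_backend(model: str, prompt: str) -> str:
    """Stand-in for a real provider call, so the sketch runs offline."""
    return f"{model}:{prompt}"


gw = Gateway(backend=fake_backend)
print(gw.complete("Refund jane.doe@example.com, SSN 123-45-6789"))
# small:Refund [EMAIL], SSN [SSN]
```

The design point is that application teams only ever call `complete`; routing, redaction, and logging policy can then change in one place.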

This centralization is not about bureaucracy; it is about leverage. By funneling all AI operations through a single, fortified chokepoint, the organization gains control over the physics of its own data. It stops bleeding energy.

The System vs. The Hero

This transition from hype to ROI mirrors the broader philosophical shift from “Heroics” to “Systems.”

In the hype phase, we relied on heroes. We relied on the prompt engineer who knew the magic incantation to get the model to work. We relied on the product visionary who claimed this feature would change the world.

But heroes are not scalable. They are points of failure.

The “Machine” we are building in 2026 requires no heroes. It requires robust, predictable systems. The AI must be a reliable cog in the larger mechanism of the business. It must carry 4 hours of work a week or 400 with equal fidelity, without complaint, and without drift.

If the AI requires constant babysitting—if it requires a “human in the loop” to verify every output—it is not a system. It is a hobby. And in a B2B SaaS environment, hobbies are fatal.

We are looking for the “Zero Drift” implementation of AI. This means the AI performs a specific, narrow task with absolute integrity, thousands of times a day. It parses the invoice. It categorizes the ticket. It generates the unit test. It does not write poetry. It does not chat. It executes.

Cutting the Rot to Save the Engine

The most difficult part of this correction is the psychological weight of “sunk cost.” Founders and operators look at the features they spent 18 months building—the features that were supposed to be the future—and they hesitate to cut them.

They worry about the “optical hit.” They worry that removing AI features will make them look regressive.

This is vanity speaking.

The seasoned operator knows that structural integrity comes from subtraction, not addition. A bridge is not stronger because you added more steel; it is stronger because you placed the steel exactly where the load demanded it, and removed everything else that was just dead weight.

To save the engine, we must cut the rot. We must look at our product roadmaps with cold, surgical eyes.

Does this feature shorten the release cycle?

Does it lower the churn rate?

Does it reduce the cost of goods sold (COGS)?

If the answer is ambiguous, the feature is gone.
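As a rough sketch, the three questions can be applied as a filter over a roadmap. The reading assumed here is that a feature survives only if at least one answer is a measured yes, with `None` standing for "ambiguous"; the function name and the roadmap entries are hypothetical.

```python
from typing import Optional


def survives_audit(shortens_release_cycle: Optional[bool],
                   lowers_churn: Optional[bool],
                   reduces_cogs: Optional[bool]) -> bool:
    """Keep a feature only if at least one answer is a confirmed True.

    None means the answer is ambiguous, which counts the same as a no.
    """
    answers = (shortens_release_cycle, lowers_churn, reduces_cogs)
    return any(answer is True for answer in answers)


# Hypothetical roadmap: (shortens release cycle, lowers churn, reduces COGS)
roadmap = {
    "invoice_parser": (None, False, True),
    "poetry_generator": (None, None, None),
}

kept = [name for name, answers in roadmap.items() if survives_audit(*answers)]
print(kept)  # ['invoice_parser']
```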

This is not a retreat. It is a consolidation of power. By removing the distraction of low-value AI, we free up resources to double down on the high-value use cases that actually drive the business forward. We stop trying to do everything and start trying to do the right thing with overwhelming force.

The Quiet Heavyweight

The companies that emerge as leaders in 2026 will not be the ones with the loudest marketing or the most features. They will be the ones with the quietest, most efficient engines.

They will be the “Quiet Heavyweights.” They will operate with subdued authority, ignoring the sensationalism of the consumer AI market. They will not promise AGI (Artificial General Intelligence). They will promise—and deliver—a 14% reduction in operational overhead and a 20% increase in engineering velocity.

They will treat AI not as a magic trick, but as a utility—like electricity or bandwidth. It is there to do work. It is there to overcome friction.

The hype is over. The party has dispersed. The floor is covered in confetti and empty bottles. Now comes the cleanup. Now comes the real work.

Build the machine. Kill the hero. Watch the ROI.

Exceptional framing of the shift happening rn. The inference gravity concept is spot on. I've been watching companies realize their AI features cost more in babysitting time than they save in automation. The Gateway architecture you describe reminds me of how caching layers became standard once everyone figured out databases couldn't handle raw traffic at scale.